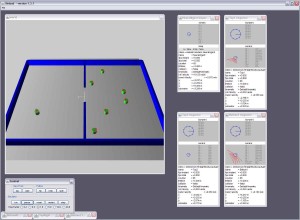

When I really started working on my research several months ago, I began bringing environments together, testing various technologies, and trying to find the right combination of tools for my work. I could have written my own from scratch, but that would have taken far more time, and there’s no need to reinvent the wheel. The first tool I went looking for was a virtual robot simulator. There are many out there and I won’t go over them, but I eventually settled on Simbad (I have included the link to the right). It is a nicely coded 3D robot simulator, easily extensible and written in Java, a language I am comfortable with. So this worked for me.

I won’t talk about all the other tools I am using. I am going to leave that for a history page. What I will do is talk about the very first problem I ran into with the Simbad simulator. This problem made me so angry that I spent a week on it. I tried variations in my code, researched what others had done, and read through multiple texts about 3D graphics programming that I purchased as a teenager. This problem is directly responsible for me wanting to blog my work.

I needed to make dumb agents (robots) that can turn towards their goal and walk toward it in a straight line.

Sounds easy, right? It wasn’t so easy for me. These dumb agents have to behave in a believable way, almost as if they were humans walking in a hallway or busy room. They are required to simulate a training environment for my learning agents. This task seems simple for you and me, but for a dumb robot it is quite difficult.

I first had to give the agents some way to know where a goal is in their environment. That is simple enough: give them a goal point in the world and the means to check their own location in the world. This is the kind of thing we can do with GPS today, so it is fine if a wanna-be-human robot can do it. As a human, how can you tell that you are pointing towards your goal? Well, you can look at your compass and it will tell you if the direction is incorrect. That boils down to plotting a straight line from your point to the goal and checking whether you are pointing parallel to that line. So now you know whether or not you are going in the right direction. If you aren’t, which way do you turn? Well, you turn the shortest amount that brings you parallel with the proper direction. But how do you know which way that is? And if your direction is parallel to the line between you and your goal, how do you know you aren’t heading away from your goal? We relate our directions to North, but the dumb agents don’t really have that luxury.

To solve these problems you have to calculate the distance to the goal. That way, if your distance to the goal is increasing, you know you are going the wrong way and you should turn towards the goal. Which way to turn though? Did this even work? No, it didn’t work. The distance calculation comes in handy, but it isn’t really a good judge of when to turn and which way to turn. Initially I just told the robot to turn right and, if it detected it was getting farther away from the goal, to turn left. This is great until you are heading directly away from the goal. Then you hop back and forth between left and right turns and never achieve anything. Humans may do this, if they are drunk. Not what I need for my test environment.
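A minimal sketch of that distance check in Java might look like the following. The class and method names are hypothetical (they are not part of Simbad), and positions are assumed to be plain x, y doubles.

```java
// Hypothetical helper; names are for illustration only, not Simbad API.
final class GoalDistance {

    /** Euclidean distance between (x1, y1) and (x2, y2). */
    static double distance(double x1, double y1, double x2, double y2) {
        return Math.hypot(x2 - x1, y2 - y1);
    }

    /** True when the step from last to current increased the distance to the goal. */
    static boolean movingAway(double lastX, double lastY,
                              double curX, double curY,
                              double goalX, double goalY) {
        return distance(curX, curY, goalX, goalY)
                > distance(lastX, lastY, goalX, goalY);
    }

    public static void main(String[] args) {
        // Goal at (10, 0); the agent steps from (0, 0) to (-1, 0), directly away.
        System.out.println(movingAway(0, 0, -1, 0, 10, 0)); // true
        // Same goal; the agent steps from (0, 0) to (1, 0), directly towards it.
        System.out.println(movingAway(0, 0, 1, 0, 10, 0));  // false
    }
}
```

Note that the check only says whether the last step was good or bad. It says nothing about which way to turn, which is exactly why it was not enough on its own.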

So I started calculating the angle between my current position and the goal, and between what would be my next location and the goal. When these angles dropped below 0.1 radians I knew I was heading in the right direction and could just keep going that way. I still didn’t know which way was the shortest turn, and I really couldn’t get the agent to turn completely towards its goal. It would walk in a big circle around its goal or it would meander towards it. The inaccuracy of calculating lines just wasn’t working. So I broke out the linear algebra texts and started reading. If you calculate the dot product you can tell if your angle to another point is acute or obtuse. That proved to be handy. I would only run into problems at the 90, 180 and 270 degree marks, but if you test for all cases of the dot product and wrap some other logic around it, you can force the agents to turn completely towards their goal. Now we are getting somewhere, but they still always turn right.
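To make the dot product idea concrete, here is a rough Java sketch. It assumes the heading is the vector from the last position to the current one and compares it with the vector from the current position to the goal. The names are hypothetical, and the 0.1 radian tolerance is simply borrowed from the paragraph above.

```java
// Hypothetical helper; names are for illustration only, not Simbad API.
final class HeadingCheck {

    /** Dot product of 2D vectors a and b: positive = acute, negative = obtuse, zero = right angle. */
    static double dot(double ax, double ay, double bx, double by) {
        return ax * bx + ay * by;
    }

    /** Unsigned angle in radians, in [0, PI], between the heading and the direction to the goal. */
    static double angleToGoal(double lastX, double lastY,
                              double curX, double curY,
                              double goalX, double goalY) {
        double hx = curX - lastX, hy = curY - lastY;   // heading: last -> current
        double gx = goalX - curX, gy = goalY - curY;   // direction: current -> goal
        double lengths = Math.hypot(hx, hy) * Math.hypot(gx, gy);
        if (lengths == 0.0) {
            return 0.0; // not moving, or already at the goal: treated as aligned (assumption)
        }
        double cos = dot(hx, hy, gx, gy) / lengths;
        // Clamp to guard against rounding pushing the value just outside [-1, 1].
        return Math.acos(Math.max(-1.0, Math.min(1.0, cos)));
    }

    /** True when the agent is within 0.1 radians of pointing at the goal. */
    static boolean onCourse(double lastX, double lastY,
                            double curX, double curY,
                            double goalX, double goalY) {
        return angleToGoal(lastX, lastY, curX, curY, goalX, goalY) < 0.1;
    }
}
```

The angle here is unsigned, so it tells you how far off you are but not on which side the goal lies. That matches the "they still always turn right" situation.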

Well, after much googling I found this very handy tidbit on a basic 2D graphics website (http://www.geocities.com/SiliconValley/2151/math2d.html), and I want to share it with you, because if anyone out there is feeling this problem like I did, this will make you very happy.

clockwise = ((currentPosition.x-lastPosition.x)*(goal.y-lastPosition.y))-((currentPosition.y-lastPosition.y)*(goal.x-lastPosition.x))

What this does is tell you whether the triangle formed by the last position, current position, and goal is laid out in a clockwise or counter-clockwise order. So no matter where you are in the X,Y plane, the sign of this calculation tells you which way to turn: in my setup, if it is < 0 you want to turn counter-clockwise, and if it is > 0 you want to turn clockwise.
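Written as Java, the calculation could look like this small sketch. The class and method names are hypothetical. One caveat worth stating: which sign counts as clockwise depends on how your axes are oriented (a y axis that points down flips it compared with one that points up), so it is worth checking one known case in your own simulator before trusting the mapping.

```java
// Hypothetical helper; names are for illustration only, not Simbad API.
final class TurnSide {

    /**
     * The "clockwise" value: the 2D cross product of (current - last)
     * with (goal - last). Zero means the three points lie on one line.
     */
    static double turnValue(double lastX, double lastY,
                            double curX, double curY,
                            double goalX, double goalY) {
        return (curX - lastX) * (goalY - lastY)
             - (curY - lastY) * (goalX - lastX);
    }

    public static void main(String[] args) {
        // Agent moved from (0, 0) to (1, 0); try goals on either side and dead ahead.
        System.out.println(turnValue(0, 0, 1, 0, 1, 1));   // 1.0
        System.out.println(turnValue(0, 0, 1, 0, 1, -1));  // -1.0
        System.out.println(turnValue(0, 0, 1, 0, 2, 0));   // 0.0
    }
}
```

A value of zero is the ambiguous case: the goal is either dead ahead or directly behind, and this calculation alone cannot tell the two apart.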

Implement these components in the right order, accounting for the 90 degree and 180 degree marks, and you will have a nice little robot that turns the shortest (and most human) way towards the goal every time and is very efficient about getting back on track to the goal even after being bumped out of the way.
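One way the pieces could be combined is sketched below in Java. This is not the exact code from my agents, just an illustration under a few assumptions: the names are hypothetical, the heading is taken from the last two positions, the tie-break when heading directly away from the goal is an arbitrary "always pick the same side", and the sign mapping follows the description above (swap it if your axes are mirrored).

```java
// Hypothetical names throughout; none of this is Simbad API.
final class TurnDecider {

    enum Turn { STRAIGHT, CLOCKWISE, COUNTER_CLOCKWISE }

    // Same 0.1 radian tolerance mentioned earlier in the post.
    static final double ON_COURSE_RADIANS = 0.1;

    static Turn decide(double lastX, double lastY,
                       double curX, double curY,
                       double goalX, double goalY) {
        double hx = curX - lastX, hy = curY - lastY;   // heading: last -> current

        // Turn-direction value from the formula in the post.
        double cross = hx * (goalY - lastY) - hy * (goalX - lastX);
        // Dot product of the heading with the direction current -> goal.
        double dot = hx * (goalX - curX) + hy * (goalY - curY);

        // Unsigned angle between heading and goal direction, in [0, PI].
        // (A stationary agent gives atan2(0, 0) = 0 and is treated as on course.)
        double angle = Math.atan2(Math.abs(cross), dot);
        if (angle < ON_COURSE_RADIANS) {
            return Turn.STRAIGHT;
        }

        // 180 degree mark: heading directly away, so the sign gives no hint.
        // Always pick the same side (assumption) to avoid flip-flopping.
        if (cross == 0.0) {
            return Turn.CLOCKWISE;
        }

        // Sign mapping as described in the post; swap if your axes are mirrored.
        return cross > 0 ? Turn.CLOCKWISE : Turn.COUNTER_CLOCKWISE;
    }

    public static void main(String[] args) {
        System.out.println(decide(0, 0, 1, 0, 5, 0));   // STRAIGHT
        System.out.println(decide(0, 0, 1, 0, -5, 0));  // CLOCKWISE (tie-break)
        System.out.println(decide(0, 0, 1, 0, 1, -3));  // COUNTER_CLOCKWISE
    }
}
```

Because the decision is recomputed from the latest positions on every step, an agent that gets bumped off its line simply picks up a new heading and corrects on the next call.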

That took me forever to get working, and it was just a simple little problem. It really made me want to talk about what I did to solve the problem and some of the thought process. Will it solve your problem? I don’t know, but I hope you enjoyed reading about my pain.